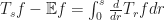

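Recently we posted a paper on arXiv “Rademacher type and Enflo type coincide” with Ramon van Handel and Alexander Volberg, solving an old problem in Banach space theory. The question is to understand under what conditions on a Banach space $(X, \|\cdot\|)$ we have the dimension-free Poincare type inequality

$$\mathbb{E}\|f(\varepsilon) - f(-\varepsilon)\|^2 \le C \sum_{j=1}^n \mathbb{E}\|D_j f(\varepsilon)\|^2 \qquad (1)$$

for all $f : \{-1,1\}^n \to X$ and all $n \ge 1$. Here, $C$ is some universal constant (independent of $f$ and the dimension $n$ of the hypercube $\{-1,1\}^n$), $\varepsilon = (\varepsilon_1, \dots, \varepsilon_n)$ is uniformly distributed on the Hamming cube $\{-1,1\}^n$, and

$$D_j f(\varepsilon) = \frac{f(\varepsilon_1, \dots, \varepsilon_j, \dots, \varepsilon_n) - f(\varepsilon_1, \dots, -\varepsilon_j, \dots, \varepsilon_n)}{2}.$$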
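To get a feeling for the two sides of this kind of inequality, here is a minimal numerical sketch for real-valued functions on a small cube. It is only an illustration under assumptions of mine: the difference operator is taken with the factor 1/2, i.e. D_j f(eps) = (f(eps) - f(eps with the j-th sign flipped)) / 2, and the helper names `cube`, `flip`, `poincare_sides` are hypothetical. For real-valued (more generally Hilbert space valued) functions, orthogonality of the Walsh system gives the inequality with constant 4, and linear functions attain equality.

```python
import itertools
import random

def cube(n):
    """All 2^n points of the Hamming cube {-1, 1}^n, as tuples."""
    return list(itertools.product((-1, 1), repeat=n))

def flip(eps, j):
    """Copy of eps with the j-th coordinate negated."""
    return eps[:j] + (-eps[j],) + eps[j + 1:]

def poincare_sides(f, n):
    """Return (E|f(eps) - f(-eps)|^2, sum_j E|D_j f(eps)|^2) for a real-valued f.

    f is a dict mapping each point of the cube to a number.
    Assumption: D_j f(eps) = (f(eps) - f(flip(eps, j))) / 2.
    """
    points = cube(n)
    lhs = sum((f[e] - f[tuple(-x for x in e)]) ** 2 for e in points) / len(points)
    rhs = sum(((f[e] - f[flip(e, j)]) / 2) ** 2
              for e in points for j in range(n)) / len(points)
    return lhs, rhs

random.seed(0)
n = 4
f = {e: random.gauss(0.0, 1.0) for e in cube(n)}   # an arbitrary test function
lhs, rhs = poincare_sides(f, n)
print(lhs, rhs, lhs <= 4 * rhs)

g = {e: float(sum(e)) for e in cube(n)}            # linear function eps_1 + ... + eps_n
print(poincare_sides(g, n))                         # left side is exactly 4 times the right side
```

The interesting question in the post is what replaces the orthogonality argument when the values of f live in a general Banach space.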

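Remark: one can ask about the validity of (1) with an arbitrary power $p > 0$, i.e., with $\|\cdot\|^p$ instead of $\|\cdot\|^2$. Notice that if $p \in (0,1]$ then the “triangle inequality” $\|x + y\|^p \le \|x\|^p + \|y\|^p$ together with the telescoping sum

$$f(\varepsilon) - f(-\varepsilon) = \sum_{j=1}^n \Big[ f(-\varepsilon_1, \dots, -\varepsilon_{j-1}, \varepsilon_j, \dots, \varepsilon_n) - f(-\varepsilon_1, \dots, -\varepsilon_j, \varepsilon_{j+1}, \dots, \varepsilon_n) \Big]$$

trivially implies (1) for any normed space $X$ (each summand is $\pm 2 D_j f$ evaluated at a uniformly distributed point, so one can take $C = 2^p$).

Also notice that if $p > 2$ then testing (1) on $f(\varepsilon) = x(\varepsilon_1 + \dots + \varepsilon_n)$ for some $x \in X$, $x \neq 0$, implies

$$2^p\, \mathbb{E}|\varepsilon_1 + \dots + \varepsilon_n|^p \le C n.$$

But by the central limit theorem,

$$\mathbb{E}\left|\frac{\varepsilon_1 + \dots + \varepsilon_n}{\sqrt{n}}\right|^p \to \mathbb{E}|g|^p, \qquad g \text{ a standard Gaussian},$$

i.e., for sufficiently large $n$ the left hand side of the previous inequality is at least $c\, n^{p/2}$ with some $c > 0$, which is impossible when $p > 2$. Thus no normed space satisfies (1) for $p > 2$.

Therefore, (1) makes nontrivial sense only for $p \in (1,2]$. Since the solution of the problem that I am going to present does not see any difference between $p = 2$ or $p \in (1,2)$, we will be working only with the case $p = 2$.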
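The central limit theorem obstruction for powers above 2 is easy to see numerically. The sketch below is an illustration of mine (the function name `abs_moment_of_sum` is hypothetical and `p` is assumed to be a positive integer): it computes the moment E|eps_1 + ... + eps_n|^p exactly from the binomial distribution and divides by n. For p = 2 the ratio stays equal to 1, while for p = 3 it grows like the square root of n, so no bound of the form "constant times n" can hold.

```python
from math import comb

def abs_moment_of_sum(n, p):
    """Exact E|eps_1 + ... + eps_n|^p for independent uniform signs (integer p >= 1).

    The sum equals n - 2k with probability C(n, k) / 2^n.
    """
    return sum(comb(n, k) * abs(n - 2 * k) ** p for k in range(n + 1)) / 2 ** n

for n in (10, 100, 1000):
    # ratio for p = 2 is constant; ratio for p = 3 keeps growing with n
    print(n, abs_moment_of_sum(n, 2) / n, abs_moment_of_sum(n, 3) / n)
```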

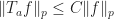

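It was conjectured that (1) holds for all $f : \{-1,1\}^n \to X$ if and only if (1) holds for linear functions only, i.e., $f(\varepsilon) = \sum_{j=1}^n \varepsilon_j x_j$ with $x_1, \dots, x_n \in X$. When $f$ is linear the inequality (1) takes the form (after absorbing a factor of 4 into the constant)

$$\mathbb{E}\Big\|\sum_{j=1}^n \varepsilon_j x_j\Big\|^2 \le C \sum_{j=1}^n \|x_j\|^2 \qquad (2)$$

and Banach spaces which satisfy (2) for all $x_1, \dots, x_n \in X$ and all $n \ge 1$ are said to be of type 2 (Rademacher type 2). The Banach spaces which satisfy (1) for all $f : \{-1,1\}^n \to X$ are said to be of Enflo type 2. Enflo type implies Rademacher type. What about the converse?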
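As a sanity check on what the Rademacher type 2 inequality E||sum_j eps_j x_j||^2 <= C sum_j ||x_j||^2 says, the following sketch (my own illustration; `type2_sides`, `l1_norm`, `l2_norm` are hypothetical helper names) evaluates both sides on the standard basis vectors of R^n, once with the Euclidean norm and once with the l1 norm. In the Euclidean case both sides equal n. In the l1 case the left side is n^2 while the right side is n, so l1 cannot satisfy the inequality with a constant independent of n.

```python
import itertools

def type2_sides(vectors, norm):
    """Return (E||sum_j eps_j x_j||^2, sum_j ||x_j||^2), averaging over all sign patterns."""
    n = len(vectors)
    dim = len(vectors[0])
    total = 0.0
    for signs in itertools.product((-1, 1), repeat=n):
        s = [sum(signs[j] * vectors[j][i] for j in range(n)) for i in range(dim)]
        total += norm(s) ** 2
    return total / 2 ** n, sum(norm(x) ** 2 for x in vectors)

def l1_norm(x):
    return sum(abs(t) for t in x)

def l2_norm(x):
    return sum(t * t for t in x) ** 0.5

n = 6
basis = [[1.0 if i == j else 0.0 for i in range(n)] for j in range(n)]
print(type2_sides(basis, l2_norm))  # both sides are n (up to rounding)
print(type2_sides(basis, l1_norm))  # left side is n^2, right side is n
```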

Banach spaces of type-2 have several key properties which I won’t have time to discuss today. An interesting part about Enflo type is that it can be written for an arbitrary metric space without using any linear structure as in (2).

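Definition.

We say that a metric space $(M, d)$ has Enflo type 2 if

$$\mathbb{E}\, d\big(f(\varepsilon), f(-\varepsilon)\big)^2 \le C \sum_{j=1}^n \mathbb{E}\, d\big(f(\varepsilon), f(\varepsilon_1, \dots, -\varepsilon_j, \dots, \varepsilon_n)\big)^2$$

holds for all $f : \{-1,1\}^n \to M$ and all $n \ge 1$ with some universal constant $C$.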

The hope was that maybe Enflo type is the “correct” extension of Rademacher type for metric spaces, and if so then it could be that many good properties that are obtained for Banach spaces under sole assumption of being of Rademacher type can be “transferred” to metric spaces having Enflo type. So this would create a “bridge” between Banach spaces and metric spaces (the subject of “Ribe program”), and even more, one may transfer some techniques from Banach spaces to metric spaces. Therefore, the first open question was whether Enflo type = Rademacher type for Banach spaces.

Let me mention some partial progress.

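Theorem 1. (Bourgain–Milman–Wolfson, 1986).

Rademacher type 2 implies Enflo type 2 with constant $C(\alpha)\, n^{\alpha}$ for any $\alpha > 0$.

In other words, Theorem 1 says that if (1) holds for linear functions with some universal constant $C$, then for any $\alpha > 0$, we have

$$\mathbb{E}\|f(\varepsilon) - f(-\varepsilon)\|^2 \le C(\alpha)\, n^{\alpha} \sum_{j=1}^n \mathbb{E}\|D_j f(\varepsilon)\|^2$$

for all functions $f : \{-1,1\}^n \to X$. We really want to take $\alpha = 0$ but the problem is that $C(\alpha)$ becomes infinity at $\alpha = 0$.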

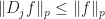

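Theorem 2. (Pisier, 1986) If a Banach space $X$ has Rademacher type 2 then

$$\mathbb{E}\|f(\varepsilon) - f(-\varepsilon)\|^q \le C(q) \sum_{j=1}^n \mathbb{E}\|D_j f(\varepsilon)\|^q$$

holds for all $q \in (1,2)$, i.e., $X$ has Enflo type $q$ for any $q \in (1,2)$.

The constant $C(q)$ becomes infinity at $q = 2$. Pisier was interested in the inequality

$$\mathbb{E}\|f - \mathbb{E}f\|^2 \le C\, \mathbb{E}\Big\|\sum_{j=1}^n \varepsilon'_j D_j f(\varepsilon)\Big\|^2$$

where in the right hand side the expectation is taken with respect to independent identically distributed uniform $\varepsilon, \varepsilon' \in \{-1,1\}^n$. If we had such an inequality then (using Rademacher type 2 conditionally on $\varepsilon$) we could write

$$\mathbb{E}\Big\|\sum_{j=1}^n \varepsilon'_j D_j f(\varepsilon)\Big\|^2 \le C \sum_{j=1}^n \mathbb{E}\|D_j f(\varepsilon)\|^2$$

and this would give us Enflo type 2 (using an obvious inequality $\mathbb{E}\|f(\varepsilon) - f(-\varepsilon)\|^2 \le 4\, \mathbb{E}\|f - \mathbb{E}f\|^2$).

But the problem was that Pisier proved his inequality with a logarithmic term, i.e.,

$$\mathbb{E}\|f - \mathbb{E}f\|^2 \le C (\log n)^2\, \mathbb{E}\Big\|\sum_{j=1}^n \varepsilon'_j D_j f(\varepsilon)\Big\|^2. \qquad (3)$$

This gives Enflo type 2 with a constant of order $(\log n)^2$. One may hope to remove $\log n$ in Pisier’s inequality (3) but unfortunately this is not possible.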

Theorem 3. (Talagrand, 1993) There exists a Banach space $X$ for which the $\log n$ factor in Pisier’s inequality (3) is optimal.

Talagrand’s example is somewhat mysterious: it reminds me of a magician pulling a rabbit out of a hat. But is it really so? I am planning to talk about it in another post.

Important partial results have been obtained under stronger assumptions than Rademacher type 2: the UMD property and Rademacher type imply Enflo type (Naor–Schechtman);

for

, and Rademacher type for

imply Enflo type (Naor–Hytonen); Rademacher type implies Scaled Enflo type (Mendel–Naor); relaxed UMD property and Rademacher type imply Enflo type (A. Eskenazis); superreflexivity and Rademacher type imply Enflo type with constant

for some

(Naor–Eskenazis).

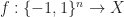

We are going to prove

Theorem: Rademacher type and Enflo type coincide.

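Proof. Assume $X$ is of Rademacher type 2, i.e., we have

$$\mathbb{E}\Big\|\sum_{j=1}^n \varepsilon_j x_j\Big\|^2 \le C \sum_{j=1}^n \|x_j\|^2 \quad \text{for all } x_1, \dots, x_n \in X \text{ and all } n \ge 1$$

with some universal constant $C$. Due to an obvious inequality

$$\|f(\varepsilon) - f(-\varepsilon)\| \le \|f(\varepsilon) - \mathbb{E}f\| + \|f(-\varepsilon) - \mathbb{E}f\|$$

we have $\mathbb{E}\|f(\varepsilon) - f(-\varepsilon)\|^2 \le 4\, \mathbb{E}\|f - \mathbb{E}f\|^2$. Thus it suffices to prove the Poincare inequality

$$\mathbb{E}\|f - \mathbb{E}f\|^2 \le C' \sum_{j=1}^n \mathbb{E}\|D_j f\|^2. \qquad (4)$$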

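Any function $f : \{-1,1\}^n \to X$ can be decomposed into a Fourier–Walsh series

$$f(\varepsilon) = \sum_{S \subset \{1,\dots,n\}} a_S W_S(\varepsilon), \qquad W_S(\varepsilon) = \prod_{j \in S} \varepsilon_j, \quad a_S \in X.$$

Next, consider the Hermite operator

$$T_\rho f(\varepsilon) = \sum_{S \subset \{1,\dots,n\}} \rho^{|S|} a_S W_S(\varepsilon), \qquad \rho \in [0,1].$$

Notice that $T_1 f = f$, and $T_0 f = \mathbb{E}f$. Therefore, we can interpolate

$$f - \mathbb{E}f = \int_0^1 \frac{d}{d\rho} T_\rho f \, d\rho.$$

Next, we have $\frac{d}{d\rho} T_\rho f = \sum_{S} |S| \rho^{|S|-1} a_S W_S$.

If we define the laplacian as $\Delta = \sum_{j=1}^n D_j$ then, obviously, $\Delta W_S = |S| W_S$. Thus by linearity we have $\frac{d}{d\rho} T_\rho f = \frac{1}{\rho} \Delta T_\rho f$. It is not hard to see that for any $g : \{-1,1\}^n \to X^*$, where $X^*$ is dual to $X$, we have the integration by parts formula

$$\mathbb{E}\langle g, \Delta T_\rho f \rangle = \sum_{j=1}^n \mathbb{E}\langle D_j T_\rho g, D_j f \rangle$$

(each $D_j$ is a self-adjoint projection which commutes with $T_\rho$). Therefore, if we let $g$ be a norming functional for $f - \mathbb{E}f$, i.e., $\mathbb{E}\|g\|_{X^*}^2 = 1$ and $\mathbb{E}\langle g, f - \mathbb{E}f \rangle = \big(\mathbb{E}\|f - \mathbb{E}f\|^2\big)^{1/2}$, we obtain

$$\big(\mathbb{E}\|f - \mathbb{E}f\|^2\big)^{1/2} = \int_0^1 \frac{1}{\rho} \sum_{j=1}^n \mathbb{E}\langle D_j T_\rho g, D_j f \rangle \, d\rho.$$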

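Next, let us have a close look at $D_j T_\rho g$. Define i.i.d. random variables $\xi_j(\rho)$ with

$$\mathbb{P}(\xi_j(\rho) = 1) = \frac{1+\rho}{2}, \qquad \mathbb{P}(\xi_j(\rho) = -1) = \frac{1-\rho}{2}$$

for any $\rho \in [0,1)$, and all $j = 1, \dots, n$. Let $\xi(\rho) = (\xi_1(\rho), \dots, \xi_n(\rho))$, and let $\varepsilon\xi(\rho) = (\varepsilon_1 \xi_1(\rho), \dots, \varepsilon_n \xi_n(\rho))$. It is clear that $T_\rho g(\varepsilon) = \mathbb{E}_\xi\, g(\varepsilon\xi(\rho))$ just because $\mathbb{E}\xi_j(\rho) = \rho$, and hence $\mathbb{E}_\xi W_S(\varepsilon\xi(\rho)) = \rho^{|S|} W_S(\varepsilon)$. Next, let us calculate $D_j T_\rho g$. For any function $h$ of one sign we have $\frac{1}{2}\mathbb{E}[h(\xi_j) - h(-\xi_j)] = \frac{\rho}{2}(h(1) - h(-1))$ and $\mathbb{E}[(\xi_j - \rho) h(\xi_j)] = \frac{1-\rho^2}{2}(h(1) - h(-1))$. We have

$$D_j T_\rho g(\varepsilon) = \frac{1}{2}\, \mathbb{E}_\xi \big[ g(\varepsilon\xi) - g(\varepsilon_1\xi_1, \dots, -\varepsilon_j\xi_j, \dots, \varepsilon_n\xi_n) \big] = \frac{\rho}{1-\rho^2}\, \mathbb{E}_\xi \big[ (\xi_j(\rho) - \rho)\, g(\varepsilon\xi(\rho)) \big].$$

Therefore

$$D_j T_\rho g(\varepsilon) = \frac{\rho}{\sqrt{1-\rho^2}}\, \mathbb{E}_\xi \big[ \delta_j(\rho)\, g(\varepsilon\xi(\rho)) \big]$$

where $\delta_j(\rho) = \frac{\xi_j(\rho) - \rho}{\sqrt{1-\rho^2}}$ has mean zero and variance one. Therefore we can proceed as

$$\big(\mathbb{E}\|f - \mathbb{E}f\|^2\big)^{1/2} = \int_0^1 \frac{1}{\sqrt{1-\rho^2}}\, \mathbb{E}\Big\langle g(\varepsilon\xi(\rho)), \sum_{j=1}^n \delta_j(\rho) D_j f(\varepsilon) \Big\rangle d\rho \le \int_0^1 \frac{1}{\sqrt{1-\rho^2}} \Big( \mathbb{E}\Big\|\sum_{j=1}^n \delta_j(\rho) D_j f(\varepsilon)\Big\|^2 \Big)^{1/2} d\rho$$

where we used the Cauchy–Schwarz inequality and the fact that $\mathbb{E}\|g(\varepsilon\xi(\rho))\|_{X^*}^2 = \mathbb{E}\|g(\varepsilon)\|_{X^*}^2 = 1$, since $\varepsilon\xi(\rho)$ is again uniformly distributed on the cube.

Thus at this moment for any Banach space $X$ we have proved

$$\big(\mathbb{E}\|f - \mathbb{E}f\|^2\big)^{1/2} \le \int_0^1 \frac{1}{\sqrt{1-\rho^2}} \Big( \mathbb{E}\Big\|\sum_{j=1}^n \delta_j(\rho) D_j f(\varepsilon)\Big\|^2 \Big)^{1/2} d\rho \qquad (5)$$

(the reader should compare it to Pisier’s inequality (3)).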
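The two identities behind this step can be checked by brute force on a small cube. The sketch below is my own illustration, with notation that is an assumption rather than something fixed by the post: `T_fourier` damps the Walsh coefficient of W_S by rho^|S|, `T_probabilistic` averages f(eps * xi) over biased signs xi with P(xi_j = 1) = (1 + rho) / 2, and `D_T_via_delta` implements rho / sqrt(1 - rho^2) * E[delta_j f(eps * xi)] with delta_j = (xi_j - rho) / sqrt(1 - rho^2). The test function is real-valued, which is enough because both identities are linear in f.

```python
import itertools
from math import prod

def cube(n):
    """All points of {-1, 1}^n as tuples."""
    return list(itertools.product((-1, 1), repeat=n))

def walsh(S, e):
    """W_S(e): product of e_j over the coordinates j where the indicator S[j] is 1."""
    return prod(x for x, s in zip(e, S) if s)

def T_fourier(f, n, rho):
    """T_rho f computed by multiplying the coefficient of W_S by rho^|S|."""
    pts = cube(n)
    subsets = list(itertools.product((0, 1), repeat=n))
    a = {S: sum(f[e] * walsh(S, e) for e in pts) / len(pts) for S in subsets}
    return {e: sum(rho ** sum(S) * a[S] * walsh(S, e) for S in subsets) for e in pts}

def weight(xi, rho):
    """Probability of the sign pattern xi when P(xi_j = 1) = (1 + rho) / 2."""
    return prod((1 + rho * x) / 2 for x in xi)

def shifted(f, e, xi):
    """f evaluated at the coordinatewise product of e and xi."""
    return f[tuple(a * b for a, b in zip(e, xi))]

def T_probabilistic(f, n, rho):
    """T_rho f(eps) computed as the average of f(eps * xi) over biased signs xi."""
    pts = cube(n)
    return {e: sum(weight(xi, rho) * shifted(f, e, xi) for xi in pts) for e in pts}

def D(f, n, j):
    """Discrete derivative with the factor 1/2."""
    return {e: (f[e] - f[e[:j] + (-e[j],) + e[j + 1:]]) / 2 for e in cube(n)}

def D_T_via_delta(f, n, rho, j):
    """rho / (1 - rho^2) * E[(xi_j - rho) f(eps * xi)], i.e. the delta_j formula."""
    pts = cube(n)
    c = rho / (1 - rho ** 2)
    return {e: c * sum(weight(xi, rho) * (xi[j] - rho) * shifted(f, e, xi) for xi in pts)
            for e in pts}

def max_gap(u, v):
    return max(abs(u[e] - v[e]) for e in u)

n, rho = 3, 0.6
f = {e: float((7 * i * i + 3 * i) % 11) for i, e in enumerate(cube(n))}  # arbitrary values
gap_T = max_gap(T_fourier(f, n, rho), T_probabilistic(f, n, rho))
gap_D = max(max_gap(D(T_fourier(f, n, rho), n, j), D_T_via_delta(f, n, rho, j))
            for j in range(n))
print(gap_T, gap_D)  # both gaps should be at the level of rounding error
```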

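Now it is a simple application of a standard symmetrization technique to complete the proof of the theorem from (5). Indeed, let $\xi'(\rho), \delta'(\rho)$ be independent copies of $\xi(\rho), \delta(\rho)$ correspondingly. Let $\varepsilon' = (\varepsilon'_1, \dots, \varepsilon'_n)$ be uniformly distributed on $\{-1,1\}^n$ and independent of everything else. Notice that $\mathbb{E}\delta'_j(\rho) = 0$, and that the vector $(\delta_j - \delta'_j)_{j=1}^n$ is symmetric, so it has the same distribution as $(\varepsilon'_j(\delta_j - \delta'_j))_{j=1}^n$.

Therefore, by convexity we have

$$\mathbb{E}\Big\|\sum_{j=1}^n \delta_j D_j f(\varepsilon)\Big\|^2 \le \mathbb{E}\Big\|\sum_{j=1}^n (\delta_j - \delta'_j) D_j f(\varepsilon)\Big\|^2 = \mathbb{E}\Big\|\sum_{j=1}^n \varepsilon'_j (\delta_j - \delta'_j) D_j f(\varepsilon)\Big\|^2.$$

Thus, applying Rademacher type 2 conditionally on $\varepsilon, \delta, \delta'$ and using $\mathbb{E}(\delta_j - \delta'_j)^2 = 2$,

$$\mathbb{E}\Big\|\sum_{j=1}^n \delta_j D_j f(\varepsilon)\Big\|^2 \le C \sum_{j=1}^n \mathbb{E}(\delta_j - \delta'_j)^2\, \mathbb{E}\|D_j f(\varepsilon)\|^2 = 2C \sum_{j=1}^n \mathbb{E}\|D_j f(\varepsilon)\|^2.$$

And the calculus shows

$$\int_0^1 \frac{d\rho}{\sqrt{1-\rho^2}} = \frac{\pi}{2},$$

so (5) gives $\big(\mathbb{E}\|f - \mathbb{E}f\|^2\big)^{1/2} \le \frac{\pi}{2} \sqrt{2C}\, \big(\sum_{j=1}^n \mathbb{E}\|D_j f\|^2\big)^{1/2}$, which is (4).

This finishes the proof of the theorem.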

UPDATE 4.2.2021:

There seems to be an interesting historical background about this problem, see, for example, Per Enflo’s blog. See also this blog for a very nice exposition about Enflo’s problem. Here is the recorded talk I gave at Corona seminar.

Dear Paata,

Thank you very much for the amazing paper and for the post! Quick question: is it easy to see whether your dimension-free Poincare-type inequality implies Pisier’s (log n)-factor inequality, which has Rademachers instead of your “standardized biased Rademachers”?


Dear Anon,

Thank you for the question, this was also mentioned to me by Alexandros Eskenazis. Pisier’s inequality can be obtained from this argument by integrating over the interval $[0,s]$ instead of $[0,1]$ where  . Indeed, starting with  the inequality (5) takes the form (here  )  . Now the integrand in the right hand side by Kahane’s contraction principle can be estimated as  where  are independent copies for  . On the other hand  , here  is independent copy of  . So  . In the left hand side we can assume without loss of generality that  . The claim is that  with some universal constant. Indeed, it suffices to show  where  . Since  therefore by several applications of the triangle inequality  . Thus  .


Thank you so much for your response, this is great! Despite this last bit, this seems to give a slightly cleaner proof than Pisier’s original argument


Hi,

Thanks for the nice exposition. I became aware of this wonderful work through Ramon’s talk last week and it is really nice knowing this. I was trying to think how much can one extend the proof of Poincare inequality for real-valued functions which proceeds more easily by just using the orthonormality of the Fourier-Walsh series and the explicit expressions for the difference operators. It seems this works well for Hilbert spaces as well. Is that right ?


Dear Yogesh,

That is right. As long as the norm $\|\cdot\|$ is defined in terms of an inner product, $\|x\|^2 = \langle x, x \rangle$, the standard proof of Poincare inequality $\mathbb{E}\|f - \mathbb{E}f\|^2 \le C \sum_{j=1}^n \mathbb{E}\|D_j f\|^2$, i.e., opening the parentheses and using orthogonality, works well. In particular, this covers Hilbert space valued functions $f : \{-1,1\}^n \to H$. When we have no information about the norm $\|\cdot\|$ (only the fact that it satisfies triangle inequality) then this “orthogonality” approach does not work anymore, and one has to invent some new techniques. As one can see from the proof (Rademacher VS Enflo) in general case the Poincare inequality holds for all $f : \{-1,1\}^n \to X$ if and only if $X$ has Rademacher type 2, i.e., $\mathbb{E}\|\sum_{j} \varepsilon_j x_j\|^2 \le C \sum_{j} \|x_j\|^2$. Verification of Rademacher type 2 for Hilbert space is easy, we just open the parentheses and use orthogonality, and in general spaces $X$, other than Hilbert, one needs to invoke some other arguments.

I also want to mention that for Hilbert space valued functions we have the identity $\mathbb{E}\|f - \mathbb{E}f\|^2 = \mathbb{E}\|f\|^2 - \|\mathbb{E}f\|^2$, and sometimes one writes the Poincare inequality as follows: $\mathbb{E}\|f\|^2 - \|\mathbb{E}f\|^2 \le C \sum_{j=1}^n \mathbb{E}\|D_j f\|^2$. However, it is not difficult to show (using induction on the dimension of the Hamming cube $\{-1,1\}^n$) that the “second version” of Poincare inequality holds for Banach space valued functions $f : \{-1,1\}^n \to X$ if and only if the space $X$ is 2-uniformly smooth, i.e., $\frac{\|x+y\|^2 + \|x-y\|^2}{2} \le \|x\|^2 + C\|y\|^2$ holds for all $x, y \in X$. Now the 2-smoothness property implies Rademacher type 2 but not vice versa. So once we change the question a little bit, in a way that makes no difference at the level of Hilbert spaces, the answer can change for Banach spaces.


Dear Paata, Thanks a lot for the detailed explanation and additional remarks. This was very helpful.


Dear Paata:

I just came across this post. It is fantastic and super-easy to read. Thank you very much.

Jose
